Timeline

16 Weeks

Role

UX Design

Team

2 UX Designers

1 Developer

1 Product Manager

Tools

Figma

Platform

Windows OS

Contribution

Digital Ethnography

Concept Ideation

Prototyping

Project Overview

Voice Access is a Windows OS speech-based accessibility feature for users with mobility challenges. This project extends its capabilities to better support individuals with Tourette Syndrome, addressing both mobility and speech impairments.

Our team aimed to make digital features more inclusive for users with accessibility needs, while also identifying opportunities to expand Microsoft’s reach and impact.

Voice Access is a speech accessibility tool designed specifically to support individuals with mobility impairments, enhancing hands-free interaction.

But why does this matter for users with Tourette Syndrome?

Well, many of them face both speech and mobility challenges!

Involuntary Speech

30% of individuals with Tourette Syndrome exhibit involuntary speech.

Mobility Impairments

86% of individuals with Tourette Syndrome experience motor tics.

Yet voice tools often misinterpret involuntary speech and fail to account for mobility impairments, which makes them hard for these users to use.

But how did we come to this conclusion?

It all started with a video we stumbled upon online showing how people with Tourette Syndrome speak!

To understand the struggles of users with Tourette Syndrome (TS), we conducted a digital ethnography study to identify their pain points.

We created a discussion post, analyzed over 20 data points from across the internet, and used thematic analysis to uncover recurring challenges.

Loss of Speech Control

Users felt a lack of control over what the voice tool processed, often due to unintended commands.

Ambiguous Feedback

Users weren’t clearly informed when something went wrong, which led to confusion.

Lack of Customization

Every user had different types and intensities of tics, triggered by varying situations, which made a one-size-fits-all solution ineffective.

But our initial research had two major oversights!

Category Mismatch

We had compared Voice Access to Alexa and Siri, even though they serve different purposes: those assistants manage tasks, while Voice Access enables full system navigation without touch.

Ignored User Reality

We had focused on Voice Access’s performance without asking whether users with Tourette Syndrome were even using it, leaving a gap in our understanding of real-world behavior.

That’s when we expanded our research and found Dragon NaturallySpeaking, a professional speech tool widely used by the TS community.

We found that it achieved 82% accuracy compared to Voice Access’s 64%, yet it remains inaccessible to many users because of key barriers.

Cost as a Barrier

The $700 license puts the tool out of reach for many of the users who need it most.

Setup Friction

Manual installation adds unnecessary complexity, especially compared to built-in alternatives.

Equipment Dependency

Optimal use depends on a dedicated microphone, raising both cost and setup effort.

With all these pain points in mind, it became clear that Voice Access could benefit from a redesign.

Problem Statement

How might we create a more adaptive voice access system that better understands diverse speech patterns, improves feedback, and supports user customization?

Here is how Voice Access handles commands from each group:

How Voice Access processes commands from users without TS

"Open my documents folder and open image 242"

Voice Access: Working on it → Documents Folder

How Voice Access processes commands from users with TS

"Open my doc…..doc…doc…kuments folder and opp….open****"

"Open my doc….****fol…fuu.****……..****** folder"

Voice Access: Unable to find "Open my doc…." Try saying "Open my….."

This led to comparative journey mapping across the two user groups, highlighting key areas for design intervention.

User without TS: "open downloads folder & open image 402"

Voice Access Active → system processes the command and shows a visual acknowledgement → Voice Access executes the command

User with TS: "open downloads..... fold...fold...****"

Voice Access Active → system can’t process the command and shows an ambiguous acknowledgement → Voice Access is unable to execute the command

How might we allow TS users to have autonomy over their commands?

How might we make Voice Access more customizable for TS users based on the severity of their tics?

Rapid ideation through Crazy 8s

Iteration 1

Explored using underlines and dashed lines to distinguish recognized vs. unrecognized words.

Risk - Could confuse users and lead to unnecessary repetition.

Iteration 2

Proposed a complete interface overhaul to allow direct text editing.

Risk - Might alienate current Voice Access users familiar with the existing structure.

Iteration 3

Considered highlighting possible tics for users to manually delete.

Risk - Increased cognitive load, reducing overall usability.

Iteration 4

Introduced the idea of a verbal cue like “over” to signal command completion.

Benefit - Provides a clear, low-effort end signal for input processing.

We merged the strongest ideas into a cohesive solution using a Frankenstein-style synthesis.

Feature 1 - Modified EoU Detection

Users can set a custom End of Utterance (EoU) command, such as "done" or "that’s it", to clearly signal when their input is complete.

This puts them in control of when Voice Access should begin processing, reducing accidental triggers. Similar cues are used in tools like Google Assistant to improve accuracy and intent recognition.
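To make the mechanism concrete, here is a minimal Python sketch of how a custom end-of-utterance cue could gate processing. It assumes a recognizer that delivers one word at a time; the `EouBuffer` class and its `feed` method are hypothetical names for illustration, not part of the Voice Access API. Nothing is released for processing until the last words heard match the user's cue.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EouBuffer:
    """Hypothetical sketch: hold recognized words until the user's custom
    end-of-utterance (EoU) cue is heard. Assumes a word-by-word recognizer
    feed; this is NOT the real Voice Access API."""

    end_cue: str = "done"
    _words: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        self._cue = [self._normalize(w) for w in self.end_cue.split()]
        if not self._cue:
            raise ValueError("end_cue must contain at least one word")

    @staticmethod
    def _normalize(word: str) -> str:
        # Lowercase and drop surrounding punctuation so "Done!" matches "done".
        return word.lower().strip(".,!?")

    def feed(self, word: str) -> str | None:
        """Add one recognized word; return the buffered command once the cue ends it."""
        self._words.append(self._normalize(word))
        n = len(self._cue)
        if self._words[-n:] != self._cue:
            return None  # cue not heard yet: keep listening, process nothing
        command = " ".join(self._words[:-n])
        self._words.clear()
        return command or None  # the cue on its own carries no command


# Usage with the cue "finish", as in the prototype's example command.
buf = EouBuffer(end_cue="finish")
for word in "Open downloads folder and view the images present. Finish!".split():
    command = buf.feed(word)
print(command)  # open downloads folder and view the images present
```

Because the buffer releases nothing until the cue is heard, a pause or tic partway through a command no longer makes Voice Access act on a partial phrase. Removing the tics themselves from the buffered text would be a separate step that this sketch does not attempt.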

Modified EoU Detection

"Voice Access Wake Up! Open downloads folder and view the images present. Finish!"

Working on it......

And if there is an error:

"Open goo...goo.. google chrome and **** and search a box of potatoes"

Opening goo...goo.. google chrome and **** and search a box of potatoes.

OR

Opening google chrome and and search a box of potatoes.

Executing.....

Feature 2 - Adaptive Speech Processing

Adaptive Speech Processing uses a federated LLM to learn each user’s TS speech patterns directly on their device, preserving privacy while improving accuracy. It adapts to how each person communicates and remains entirely optional: users keep full control through a simple toggle in settings.
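As a rough illustration of the federated part only, here is a toy Python sketch of one round of federated averaging (FedAvg), the classic federated learning scheme, with a single number standing in for a model's weights. The `Device` class, its sample data, and `federated_round` are hypothetical; the sketch shows just two properties described above, that raw samples stay on each device while only trained weights are shared, and that devices with speech learning switched off take no part. It is not how an LLM would actually be trained.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Device:
    """Hypothetical user device. Its raw `samples` never leave this object."""

    samples: list[float]
    learning_enabled: bool  # stands in for the "Enable Speech Learning" toggle

    def local_update(self, global_weight: float, lr: float = 0.1, steps: int = 50) -> float:
        """Train a one-weight toy model on local data (gradient descent on squared error)."""
        w = global_weight
        for _ in range(steps):
            grad = sum(2 * (w - x) for x in self.samples) / len(self.samples)
            w -= lr * grad
        return w


def federated_round(global_weight: float, devices: list[Device]) -> float:
    """One FedAvg round: average the devices' trained weights, weighted by sample count."""
    updates = [
        (d.local_update(global_weight), len(d.samples))
        for d in devices
        if d.learning_enabled and d.samples  # opted-out devices send nothing
    ]
    if not updates:
        return global_weight
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


devices = [Device([1.0, 1.0], True), Device([3.0] * 4, True), Device([100.0], False)]
print(round(federated_round(0.0, devices), 3))  # 2.333; the opted-out device is ignored
```

Weighting by sample count lets devices with more local data pull the shared model further. Adapting to each individual, as the feature describes, would need an additional on-device personalization step that this sketch leaves out.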

Adaptive Speech Processing

Voice Access status: Listening.... (Say “turn off microphone” or long press the mic button to turn off the mic.)

Settings menu: Select default microphone, Enable Speech Learning, Manage options, Languages, Turn off voice access

Speech learning toggle: Turn on speech learning / Turn off speech learning

A quick cognitive walkthrough revealed:

Anxiety-Inducing Micro-Interactions

Subtle UI behaviors risked heightening user frustration, which could increase drop-off and even trigger tics under stress.

Unclear Error Recovery

Generic error messages lacked actionable guidance, making it difficult for users to understand or recover from missteps.

Harsh Visual Feedback

Strong visual cues, especially red error highlights, could undermine user confidence and emotional comfort.

The Impact

These insights led us to reassess key interactions.

We made error messages more actionable (for example, prompting users to repeat a command or flagging background noise) and made visual error cues optional, off by default, to reduce emotional friction.

However, we held off on finalizing the solution so that real user feedback could ground future iterations and lead to a more authentic experience.

Around the same time, we found an article about major tech firms and UIUC improving voice recognition for underrepresented speech patterns, reinforcing the relevance of our work.

View other projects

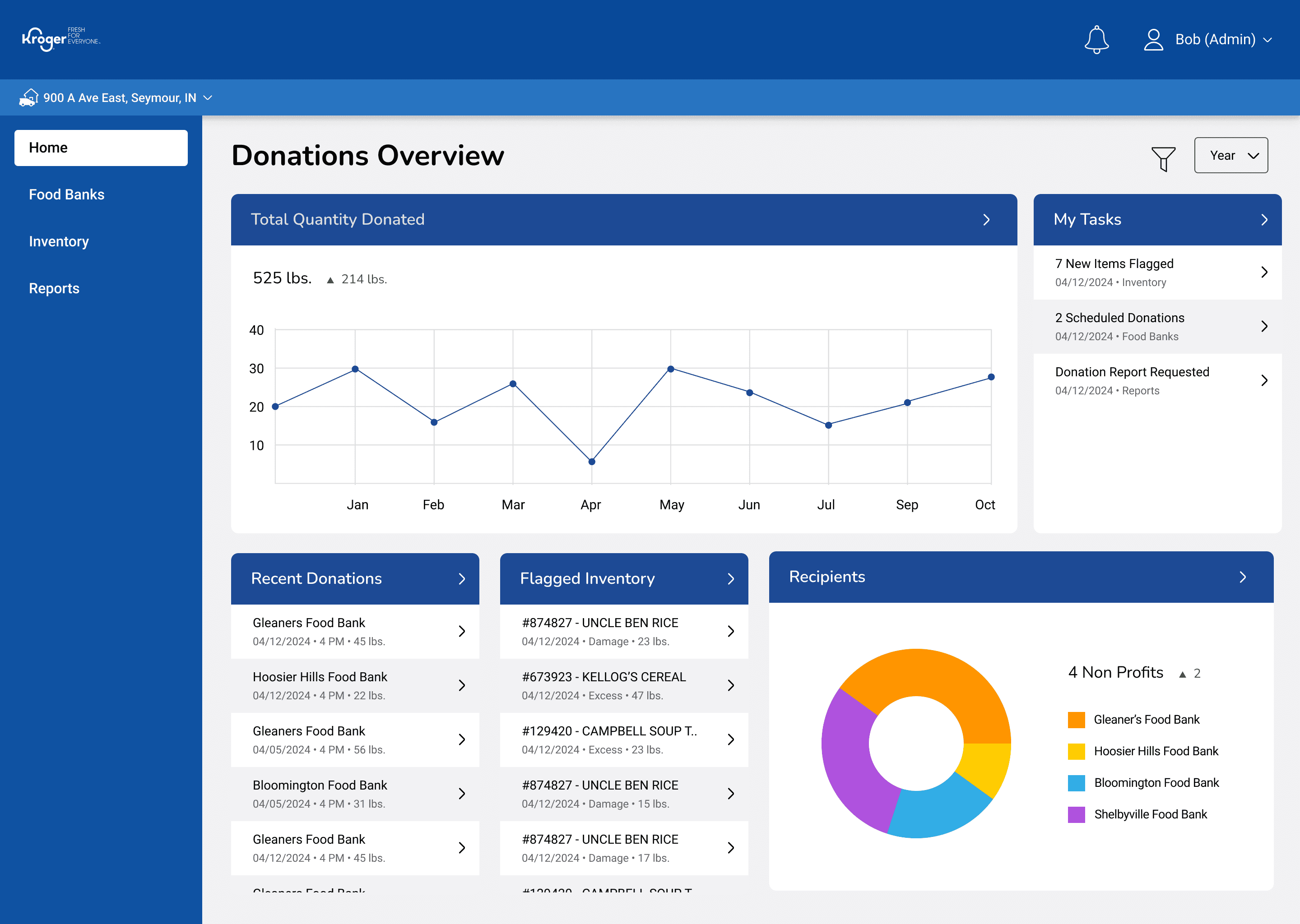

Streamlining Kroger's Food Donation Management

SUPPLY CHAIN | ECO HACKATHON

Optimized navigation and analytics for Jetsweat Fitness

B2B SAAS | DESIGN SYSTEM | SUMMER 24'

Shipped

Enhancing grocery

shopping with a Retail Buddy

USER EXPERIENCE | PROTOTYPING